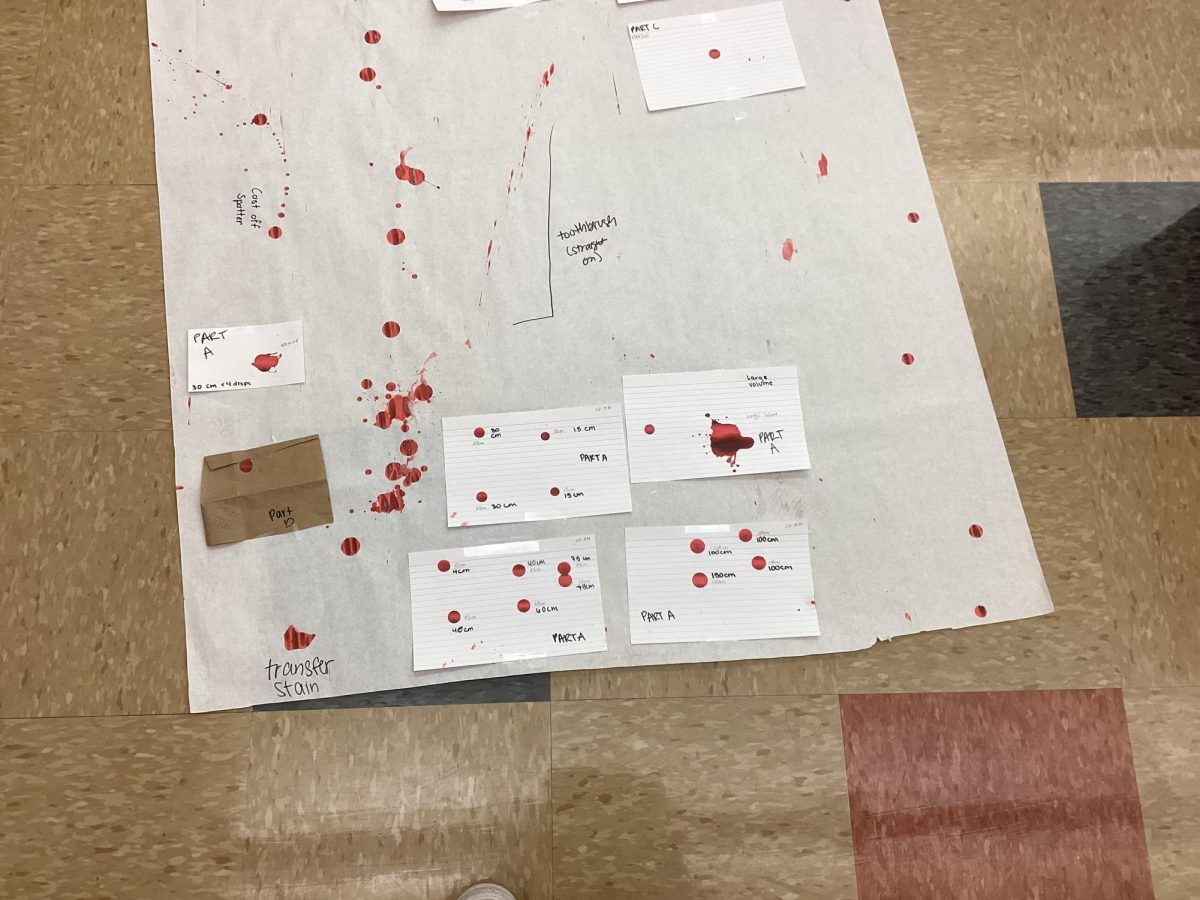

The use of AI in the production of art pieces is rising, with companies like Midjourney stealing art from established artists.

The integration of AI into everyday technology has been an interesting and confusing ride so far, especially since AI now seems to be nearly everywhere. Companies like Microsoft, Amazon, and Apple use AI to assist their users, and across the web, people are shown advertisements made with AI. The technology has proven useful, but a growing list of drawbacks is quickly emerging.

Junior Eleana Locatelli ’25 said, “I think it’s a little scary how fast it’s [technology] growing.”

AI art has been a controversial topic in the art community since its earliest days. Artists either use it to help generate ideas or despise it with a burning passion, and recently more people have come to feel the latter. AI art has become so advanced that it can be hard to tell whether a piece was made by a person or by a program.

So how can AI programs make such convincing art? Developers train them on large collections of human-made artwork, so the program learns to replicate specific styles, allowing anyone to generate pieces in that same style.

“I don’t think it’s art at all,” Marco Molina ’26 said. “I say this because it’s just an algorithm stealing other people’s art and putting it through a machine.”

This way of training AI is one of the main reasons artists have come to dislike AI art, especially since there is a high chance that an AI company has used their work without permission to train its program. Though this may have little effect on anyone else, it is financially and professionally harmful to artists who spend hours on a piece only to see it replicated by AI. The problem has drawn attention recently because of a lawsuit against the AI company Midjourney, which is accused of doing exactly that.

Molina said, “I think it’s very detrimental to our [art] community since it will take a lot of the creativity from artists.”

Midjourney is an independent AI company whose program lets people generate AI art from a written prompt. Although the lawsuit against the company was filed on Jan. 13, 2023, and largely dismissed in October, it has recently resurfaced because of a leaked Google Sheet: a 28-page list of 16,000 artists whose work Midjourney had allegedly used without their consent to train its AI program. The leak caused an uproar in the art community, and artists both on and off the list confronted Midjourney about how it trains its AI.

Illustrator and video game artist John Lam, who originally exposed Midjourney, wrote in a tweet, “Prompt engineers, your ‘skills’ are not yours,” a line that sums up the widespread distaste for Midjourney’s actions.

These advancements in AI make people question how much is being stolen, not just in art but also in writing, music, photography, and more. What is made by people, and what is made by AI? It is not only famous art that gets stolen; a Bishop Gorman student’s art could be taken, too.

To help artists protect their work, articles covering the situation point to tools such as Glaze, a program developed at the University of Chicago that alters images so their style is harder for AI to copy, and haveibeentrained.com, which lets artists check whether their work appears in AI training datasets.

The world is changing rapidly and technology is a big part of that, but do we want to risk letting AI replace the people who create our art?